In our post on User-Friendly SQL Functions, we discussed 12 user-friendly BigQuery SQL functions that Google Cloud had just launched. One of them makes it simple to export data from BigQuery to Google Cloud Storage (GCS) using SQL.

In that post we also mentioned that BigQuery offers several FREE operations, one of which is the EXPORT DATA statement. This got us wondering: how many practitioners actually know that these operations are free? Sharing them seemed like an easy way to help teams use BigQuery more cost-effectively, so that is what we have done here.

If BigQuery is new to you

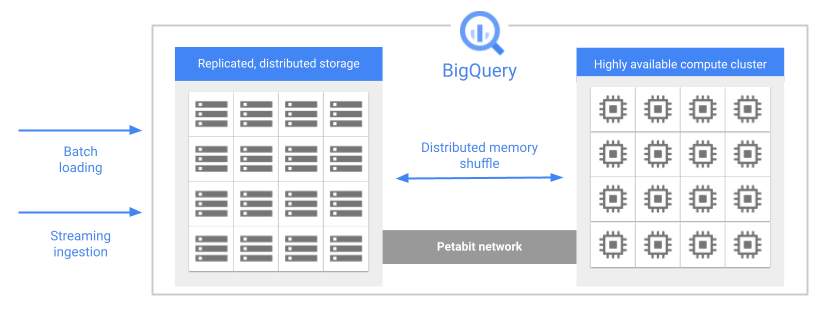

Google BigQuery is a cutting-edge, hyper-scale cloud data warehousing platform. Google's network, storage, and processing infrastructure is what allows it to run SQL queries over petabytes of data.

When you consider that this gives you access to computational capacity comparable to research-grade supercomputers, it is rather amazing. A separate tutorial explains how to load data from GCS into BigQuery.

1. No ongoing fees

The first thing to keep in mind with BigQuery is that its pricing is consumption-based. Unlike some of the other options out there (such as Amazon Redshift and Snowflake), you pay only for the data you store and the data your queries scan (consume). There is no fixed ongoing fee.
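To make the consumption-based model concrete, here is a minimal Python sketch of a monthly bill: scan cost plus storage cost, with no fixed term at all. The `Rates` class, the function name, and the rates used in the example are placeholders we made up for illustration, not Google's list prices (check the current BigQuery pricing page), and free-tier allowances are ignored here.

```python
from dataclasses import dataclass

GIB = 1024 ** 3  # bytes in one gibibyte
TIB = 1024 ** 4  # bytes in one tebibyte


@dataclass(frozen=True)
class Rates:
    """Unit prices in USD. Values passed in below are placeholders, not list prices."""

    per_tib_scanned: float
    per_gib_month_stored: float


def monthly_cost(bytes_scanned: int, bytes_stored: int, rates: Rates) -> float:
    """Consumption-based cost: scanning plus storage, with no fixed fee term."""
    scan_cost = bytes_scanned / TIB * rates.per_tib_scanned
    storage_cost = bytes_stored / GIB * rates.per_gib_month_stored
    return scan_cost + storage_cost


if __name__ == "__main__":
    rates = Rates(per_tib_scanned=5.0, per_gib_month_stored=0.02)  # placeholder rates
    print(monthly_cost(0, 0, rates))  # an idle, empty project costs nothing
    print(monthly_cost(2 * TIB, 100 * GIB, rates))
```

Because there is no fixed term, the bill scales to zero when nothing is stored or scanned.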

2. The free BigQuery tier

For most of its core services, including BigQuery, Google Cloud offers a substantial free tier. As part of the Google Cloud Free Tier, the first 10 GiB of storage and the first 1 TiB of query data scanned each month are free.

Many people have asked us whether unused free-tier allowance carries over to the next month. It doesn't, but the free tier still lets you try out services like BigQuery without spending a dime.
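The sketch below illustrates why nothing carries over: each month's usage is netted against a fresh allowance, and any unused allowance is simply discarded. The function name and the (TiB scanned, GiB stored) tuple format are our own illustration; the allowance constants mirror the figures quoted above.

```python
FREE_SCAN_TIB = 1.0      # monthly free query allowance quoted above
FREE_STORAGE_GIB = 10.0  # monthly free storage allowance quoted above


def billable_usage(months: list[tuple[float, float]]) -> list[tuple[float, float]]:
    """Return (billable_scan_tib, billable_storage_gib) for each month.

    The allowance resets every month, so unused free usage is discarded
    instead of being carried over into the following month.
    """
    billable = []
    for scan_tib, storage_gib in months:
        billable.append(
            (max(0.0, scan_tib - FREE_SCAN_TIB), max(0.0, storage_gib - FREE_STORAGE_GIB))
        )
    return billable


if __name__ == "__main__":
    # A quiet month followed by a busy one: the quiet month's leftover
    # allowance does not reduce the busy month's bill.
    print(billable_usage([(0.2, 4.0), (1.5, 25.0)]))
```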

3. Loading data into BigQuery

Loading JSON, CSV, and Parquet files from Google Cloud Storage into BigQuery is a pretty simple process. We also frequently create federated (external) tables in BigQuery that read the files stored in GCS at query time. Both approaches are simple, and loading the files or defining the tables is free; in GCS you pay only for data storage. (Queries that read an external table are still billed like any other query.)
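As an illustration, the hypothetical Python helpers below assemble the two kinds of statement we have in mind: `LOAD DATA`, which copies files into BigQuery storage, and `CREATE EXTERNAL TABLE`, which defines a federated table over the files. They only build SQL text for you to run in the console or through a client library; the bucket, project, and table names are placeholders, and only the three formats mentioned above are accepted.

```python
SUPPORTED_FORMATS = {"CSV", "NEWLINE_DELIMITED_JSON", "PARQUET"}


def _options(uris: list[str], fmt: str) -> str:
    """Render the shared format/uris option list after validating the inputs."""
    fmt = fmt.upper()
    if fmt not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported format: {fmt}")
    if not uris or not all(u.startswith("gs://") for u in uris):
        raise ValueError("expected one or more gs:// URIs")
    uri_list = ", ".join(f"'{u}'" for u in uris)
    return f"format = '{fmt}', uris = [{uri_list}]"


def load_data_sql(table: str, uris: list[str], fmt: str) -> str:
    """LOAD DATA copies the files into BigQuery-managed storage."""
    return f"LOAD DATA INTO `{table}`\nFROM FILES ({_options(uris, fmt)});"


def external_table_sql(table: str, uris: list[str], fmt: str) -> str:
    """An external (federated) table leaves the files in GCS and reads them at query time."""
    return f"CREATE EXTERNAL TABLE `{table}`\nOPTIONS ({_options(uris, fmt)});"


if __name__ == "__main__":
    files = ["gs://example-bucket/orders/*.parquet"]  # hypothetical bucket
    print(load_data_sql("my-project.sales.orders", files, "parquet"))
    print(external_table_sql("my-project.sales.orders_ext", files, "parquet"))
```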

Additionally, the BigQuery Data Transfer Service (DTS) provides daily FREE ingestion from several popular data sources, including:

Data storage

- Google Cloud Storage

- Amazon S3

- Amazon Redshift

- Teradata

SaaS

- Google Campaign Manager

- Google Analytics 360

- Google Ads

- Google Ad Manager

- Google Merchant Center

- Search Ads 360

- YouTube Channel

- YouTube Content Owner

4. Copying BigQuery tables

Copying data is free, both within a project and between projects.
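From SQL, a table copy can be written as `CREATE TABLE ... COPY`; the `bq cp` command and copy jobs in the console are the other usual routes. The Python helper below only assembles the statement. The function name and project names are hypothetical, and we assume (rather than confirm here) that this DDL form is billed like a copy job, so check the pricing page before relying on it.

```python
def copy_table_sql(source: str, destination: str, replace: bool = False) -> str:
    """Build a CREATE TABLE ... COPY statement from fully qualified table names."""
    for name in (source, destination):
        if name.count(".") < 2:
            raise ValueError(f"expected project.dataset.table, got {name!r}")
    verb = "CREATE OR REPLACE TABLE" if replace else "CREATE TABLE"
    return f"{verb} `{destination}`\nCOPY `{source}`;"


if __name__ == "__main__":
    # Cross-project copy between two hypothetical projects.
    print(copy_table_sql("analytics-prod.sales.orders", "analytics-dev.sales.orders"))
```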

5. BigQuery data export to Google Cloud Storage

This is the new capability (discussed in our previous post) that makes it simple to export BigQuery data to GCS using SQL. The good news is that the data transfer itself is free: you incur only the storage costs in GCS and BigQuery. (The SELECT query that produces the exported rows is billed like any other query.)
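A minimal sketch of an EXPORT DATA statement, built by a hypothetical Python helper. It enforces the single `*` wildcard that the destination URI needs (BigQuery may split the output across several files) and only adds the `header` option for CSV output. The bucket and table names are placeholders.

```python
EXPORT_FORMATS = {"CSV", "JSON", "AVRO", "PARQUET"}


def export_data_sql(query: str, uri: str, fmt: str = "CSV",
                    overwrite: bool = True, header: bool = True) -> str:
    """Build an EXPORT DATA statement that writes query results to Cloud Storage."""
    fmt = fmt.upper()
    if fmt not in EXPORT_FORMATS:
        raise ValueError(f"unsupported format: {fmt}")
    if not uri.startswith("gs://") or uri.count("*") != 1:
        raise ValueError("uri must be a gs:// path containing exactly one '*' wildcard")
    options = [
        f"uri = '{uri}'",
        f"format = '{fmt}'",
        f"overwrite = {'true' if overwrite else 'false'}",
    ]
    if fmt == "CSV":
        # The header option only applies to CSV output.
        options.append(f"header = {'true' if header else 'false'}")
    # The SELECT after AS is the part billed as a regular query.
    return "EXPORT DATA OPTIONS (\n  " + ",\n  ".join(options) + "\n) AS\n" + query.strip() + ";"


if __name__ == "__main__":
    print(export_data_sql("SELECT id, total FROM `my-project.sales.orders`",
                          "gs://example-bucket/exports/orders-*.csv"))
```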

6. DDL operations

There are no fees for DDL operations such as dropping a table, dataset, or view. This includes the newly launched TRUNCATE TABLE statement (yes, Google Cloud was undoubtedly lagging on this one). When emptying a table, we advise using TRUNCATE rather than DELETE, since DELETE requires a scan of the rows to be removed. Partitions can also be deleted in this way.
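The statements involved are short. These hypothetical Python helpers emit them; object names are placeholders, and `CASCADE` is opt-in because it drops every table in the dataset along with it.

```python
def truncate_sql(table: str) -> str:
    """Empty a table with TRUNCATE TABLE.

    Unlike DELETE FROM t WHERE TRUE, this does not need to scan the rows it removes.
    """
    return f"TRUNCATE TABLE `{table}`;"


def drop_sql(kind: str, name: str, cascade: bool = False) -> str:
    """Drop a table, view, or dataset (a dataset is a SCHEMA in BigQuery DDL)."""
    kind = kind.upper()
    if kind not in {"TABLE", "VIEW", "SCHEMA"}:
        raise ValueError(f"unsupported object kind: {kind}")
    if cascade and kind != "SCHEMA":
        raise ValueError("CASCADE only applies to DROP SCHEMA")
    suffix = " CASCADE" if cascade else ""
    return f"DROP {kind} IF EXISTS `{name}`{suffix};"


if __name__ == "__main__":
    print(truncate_sql("my-project.staging.events"))
    print(drop_sql("view", "my-project.reporting.daily_orders"))
```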

7. Metadata operations

Descriptions are the most common type of metadata in BigQuery, and we advise all of our clients to add one to every table to speed up data discovery. Viewing, updating, and deleting this metadata are all free of charge, which is excellent for keeping down the cost of maintaining a data catalog.
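Descriptions are set with `ALTER TABLE ... SET OPTIONS`. The hypothetical helpers below build the statement for a whole table or for a single column, escaping the description as a BigQuery string literal; table and column names are placeholders.

```python
def _string_literal(text: str) -> str:
    """Quote text as a string literal, escaping backslashes, quotes, and newlines."""
    escaped = text.replace("\\", "\\\\").replace("'", "\\'").replace("\n", "\\n")
    return f"'{escaped}'"


def table_description_sql(table: str, description: str) -> str:
    """Set (or overwrite) the description of a table."""
    return f"ALTER TABLE `{table}`\nSET OPTIONS (description = {_string_literal(description)});"


def column_description_sql(table: str, column: str, description: str) -> str:
    """Set (or overwrite) the description of one column."""
    return (
        f"ALTER TABLE `{table}`\n"
        f"ALTER COLUMN {column} SET OPTIONS (description = {_string_literal(description)});"
    )


if __name__ == "__main__":
    print(table_description_sql("my-project.sales.orders", "Customer's confirmed orders"))
```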

For building SQL data pipelines in BigQuery, we highly recommend the data build tool (dbt), which fully supports updating table metadata automatically. It is an excellent way to document data assets automatically.

8. Pseudo-column queries

Some BigQuery tables support pseudo-columns. For instance, we often see clients using Firestore's built-in feature to export data to BigQuery. The exported data lands in date-sharded tables, and the date is automatically exposed as the `_TABLE_SUFFIX` pseudo-column, which makes it easy to write cost-effective SELECT queries. Querying any pseudo-column is free.
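For date-sharded tables, a wildcard table plus a `_TABLE_SUFFIX` filter limits the query to the shards you actually need. The helper below is a hypothetical sketch that assumes the shards share a common prefix followed by a `YYYYMMDD` suffix (such as `events_20230101`).

```python
from datetime import date


def sharded_range_query(table_prefix: str, start: date, end: date, columns: str = "*") -> str:
    """Query only the date shards between start and end (inclusive)."""
    if start > end:
        raise ValueError("start must not be after end")
    return (
        f"SELECT {columns}\n"
        f"FROM `{table_prefix}*`\n"
        f"WHERE _TABLE_SUFFIX BETWEEN '{start:%Y%m%d}' AND '{end:%Y%m%d}'"
    )


if __name__ == "__main__":
    print(sharded_range_query("my-project.exports.events_", date(2023, 1, 1), date(2023, 1, 31)))
```

Because the suffixes are zero-padded `YYYYMMDD` strings, the string comparison in `BETWEEN` orders them the same way as the dates themselves.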

9. Meta-table queries

The following meta tables can be queried for free:

- `__PARTITIONS_SUMMARY__`:

Returns metadata about the partitions of a partitioned table, whether the table is partitioned by ingestion time or by a column.

- `__TABLES_SUMMARY__`:

Returns metadata about the tables and views in a dataset.
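A sketch of the two meta-table queries, again as hypothetical Python helpers that only return SQL text. `__TABLES_SUMMARY__` can be read with standard SQL, while `__PARTITIONS_SUMMARY__` is, to our knowledge, only reachable through a legacy SQL table decorator; the selected column names are worth checking against the current documentation.

```python
def tables_summary_sql(project: str, dataset: str) -> str:
    """Standard SQL over the __TABLES_SUMMARY__ meta-table of one dataset."""
    return f"SELECT table_id, type\nFROM `{project}.{dataset}.__TABLES_SUMMARY__`;"


def partitions_summary_sql(project: str, dataset: str, table: str) -> str:
    """Legacy SQL over a table's __PARTITIONS_SUMMARY__ decorator."""
    return (
        "#legacySQL\n"
        "SELECT partition_id\n"
        f"FROM [{project}:{dataset}.{table}$__PARTITIONS_SUMMARY__]"
    )


if __name__ == "__main__":
    print(tables_summary_sql("my-project", "sales"))
    print(partitions_summary_sql("my-project", "sales", "orders"))
```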

10. User-defined functions (UDFs)

We saved perhaps the nicest for last: running a UDF incurs no fee of its own (the query that calls it is still billed for the data it scans). That is very useful to know, because UDFs can carry out fairly sophisticated processing: they support JavaScript and can use JavaScript libraries.
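For example, a JavaScript UDF can be declared inline with `CREATE TEMP FUNCTION ... LANGUAGE js`. The hypothetical helper below assembles such a declaration; the function name `multiplyInputs` and its body are placeholders. A temporary function has to be used in the same query or script that declares it, e.g. followed by `SELECT multiplyInputs(2, 3);`.

```python
def js_udf_sql(name: str, params: dict[str, str], returns: str, body: str) -> str:
    """Build a temporary JavaScript UDF declaration; the body sits in a raw triple-quoted string."""
    if '"""' in body:
        raise ValueError("body must not contain a triple quote")
    param_list = ", ".join(f"{p} {t}" for p, t in params.items())
    return (
        f"CREATE TEMP FUNCTION {name}({param_list})\n"
        f"RETURNS {returns}\n"
        "LANGUAGE js\n"
        f'AS r"""\n  {body}\n""";'
    )


if __name__ == "__main__":
    print(js_udf_sql("multiplyInputs", {"x": "FLOAT64", "y": "FLOAT64"}, "FLOAT64", "return x * y;"))
```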

Conclusion

In conclusion, knowing which operations BigQuery offers free of charge should go a long way toward ensuring that data analytics solutions are built economically.