Web scraping is the automated collection and extraction of data from websites. The Python community has produced many excellent web scraping tools. The internet is without a doubt the world's largest source of information, both accurate and inaccurate.

Data science, corporate analytics, and investigative journalism are just a few of the fields that can benefit greatly from collecting and analyzing website data. If you're interested in learning the fundamentals of web scraping with Python, this guide will be helpful.

Web Scraping Applications

- Automated price comparison

- Bulk extraction of reviews and climate reports

- Product price tracking

- Data analysis

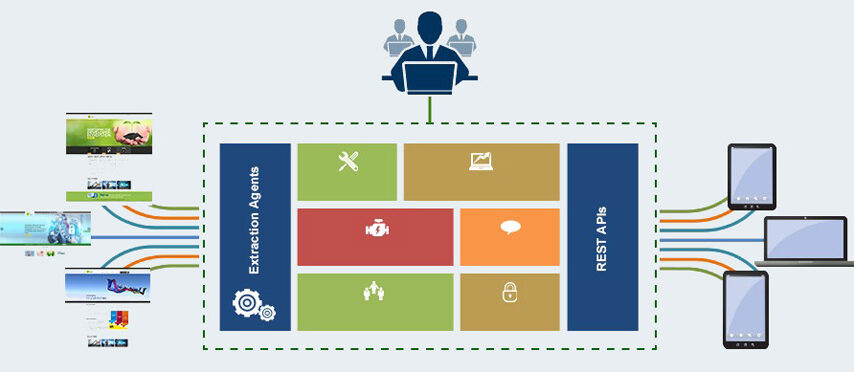

Basics of the Web Scraping API

Web scraping with Python involves two sides: the website that holds the data, and the scraper that extracts it.

The Website

Web pages are built with HTML (HyperText Markup Language). HTML uses tags to hold the text, images, videos, and hyperlinks that make up a page, and each tag serves a specific purpose.
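To see how tags give a page its structure, here is a minimal sketch using Python's built-in html.parser module. The sample page and the TagLister class are hypothetical, written only for illustration: the parser records each opening tag in document order, plus the href of every link.

```python
from html.parser import HTMLParser

# A tiny hand-written page (hypothetical content) with a heading, an image, and a link.
SAMPLE_PAGE = """
<html>
  <body>
    <h1>Product catalogue</h1>
    <img src="shoe.png" alt="Running shoe">
    <a href="/shoes/123">View details</a>
  </body>
</html>
"""


class TagLister(HTMLParser):
    """Records every opening tag in document order and the href of each <a> tag."""

    def __init__(self):
        super().__init__()
        self.tags = []
        self.links = []

    def handle_starttag(self, tag, attrs):
        self.tags.append(tag)
        if tag == "a":
            # attrs is a list of (name, value) pairs
            href = dict(attrs).get("href")
            if href is not None:
                self.links.append(href)


parser = TagLister()
parser.feed(SAMPLE_PAGE)
parser.close()
print(parser.tags)   # ['html', 'body', 'h1', 'img', 'a']
print(parser.links)  # ['/shoes/123']
```

Each tag marks out one kind of content, which is exactly what a scraper relies on when it goes looking for data.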

CSS (Cascading Style Sheets) provides a website's visual presentation. HTML and CSS are two of the most important technologies for building web pages, and nearly every modern website also uses JavaScript (JS).

JavaScript (JS) is a programming language that adds dynamic behavior and interactivity to web pages. Users can interact with components such as buttons, forms, and navigation menus, and the page responds to those interactions without having to reload. Together, HTML, CSS, and JS make up a complete modern web page.

Scraping the web

Web scraping works in the opposite direction from building a page: instead of assembling HTML, CSS, and JS into a page, a scraper takes a finished page apart. Using CSS selectors and a library's built-in functions, you can pull specific pieces of information out of a page. Suppose, for instance, that you need to scrape product prices from Amazon.

First, your Python code sends an HTTP request to Amazon's servers. Once the server responds with the page, you use a scraping library to access and parse the page's HTML source code.
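The request step can be sketched with the standard library's urllib.request. The fetch_html helper below is hypothetical, and its User-Agent value is an illustrative assumption; some sites refuse or block requests that look automated, so a real scraper may need more than this.

```python
from urllib.request import Request, urlopen


def fetch_html(url, timeout=10.0):
    """Send a GET request to url and return the response body as text."""
    # Illustrative User-Agent; some servers refuse requests that do not send one.
    request = Request(url, headers={"User-Agent": "Mozilla/5.0 (compatible; example-scraper)"})
    with urlopen(request, timeout=timeout) as response:
        # Decode with the charset the server declares, falling back to UTF-8.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Usage, with a placeholder URL: `html = fetch_html("https://www.example.com/")`. The returned string is the page source that the parsing step works on.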

Once you have retrieved the source code, the next step is to identify the class, id, or tag in which the product's price is stored. After you have found the right selector, you extract the data with the library's built-in functions.
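Once the selector is known, extraction amounts to walking the HTML and keeping the text of the matching elements. The sketch below uses only html.parser and collects the text of every element whose class attribute includes a given name. The class name "price", the sample markup, and the helper names are assumptions for illustration, since the real selector depends on the page you are scraping; the code also assumes reasonably well-formed HTML.

```python
from html.parser import HTMLParser

# Void elements never get an end tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collects the text of every element whose class attribute contains target_class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.depth = 0      # nesting depth inside the current match; 0 means "not inside one"
        self.current = []   # text fragments of the match being read
        self.results = []   # finished text of each match, in document order

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1  # a child element inside the current match
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self.depth = 1
            self.current = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:
            self.results.append("".join(self.current).strip())

    def handle_data(self, data):
        if self.depth:
            self.current.append(data)


def extract_by_class(html, target_class):
    """Return the stripped text of each element carrying target_class."""
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    parser.close()
    return parser.results


# Hypothetical product markup; the real class name depends on the site.
PAGE = """
<div class="product">
  <h2 class="title">Running shoe</h2>
  <span class="price sale">$<b>59</b>.99</span>
  <span class="price">$74.50</span>
</div>
"""

prices = extract_by_class(PAGE, "price")
print(prices)  # ['$59.99', '$74.50']
```

Hand-writing a parser like this shows what "finding the selector" means; the libraries below do the same job with far less code.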

Most Effective Python Libraries For Web Scraping

Beautiful Soup

Beautiful Soup is a Python library for parsing HTML and XML documents, and it is one of the most popular Python libraries for web scraping. Beautiful Soup builds a parse tree from a page's HTML source, which you can then search to extract data.

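A minimal Beautiful Soup sketch, assuming the beautifulsoup4 package is installed; the product snippet is hypothetical. select_one() takes a CSS selector, while find() and find_all() search the parse tree by tag name and attributes.

```python
from bs4 import BeautifulSoup

# Hypothetical product page; in practice this would be the HTML fetched from the site.
html = """
<div id="product">
  <h2 class="title">Running shoe</h2>
  <span class="price">$59.99</span>
  <a href="/reviews">Reviews</a>
  <a href="/sizes">Size guide</a>
</div>
"""

# "html.parser" is Python's built-in parser, so no extra parser package is required.
soup = BeautifulSoup(html, "html.parser")

# CSS selector: the element with class "price" inside the element with id "product".
price = soup.select_one("#product .price").get_text(strip=True)

# find() / find_all() search the parse tree by tag name and attributes.
title = soup.find("h2", class_="title").get_text(strip=True)
links = [a["href"] for a in soup.find_all("a")]

print(title, price)  # Running shoe $59.99
print(links)         # ['/reviews', '/sizes']
```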

Selenium

Python's Selenium package automates web browsers for a variety of tasks, and web scraping is one of the most common. Because it drives a real browser, it can extract content that is generated by JavaScript.

Scrapy

Scrapy is a Python framework for large-scale web scraping. It includes the tools you need to crawl websites and extract data from them. If you are planning a sizable project, such as scraping thousands of web pages, Scrapy is an excellent choice.

Final Thoughts

I hope you now have a solid grasp of the basics of web scraping. Each of the Python libraries listed above works well for the job. If you would rather not build and maintain scrapers yourself, you can also turn to a web scraping service. Zenscrape, one of the top online scraping and API platforms, lets you scrape at scale without worrying about being blocked. Visit us today to take advantage of our web scraping services.